Artificial Intelligence News - Page 1

Supermicro confirms NVIDIA B200 AI GPU delay: offers liquid-cooled H200 AI GPUs instead

Just before NVIDIA announced its issues with its new Blackwell AI GPUs, partner Supermicro seemingly confirmed Blackwell B200 AI GPUs being delayed, offering its customers liquid-cooled Hopper H200 AI GPUs in their place.

Supermicro CEO Charles Liang said that the possible delay of NVIDIA's new Blackwell GPUs for AI and HPC systems will not have a dramatic impact on AI server makers, or the AI server market. Liang said: "We heard NVIDIA may have some delay, and we treat that as a normal possibility".

Liang continued: "When they introduce a new technology, new product, [there is always a chance] there will be a push out a little bit. In this case, it pushed out a little bit. But to us, I believe we have no problem to provide the customer with a new solution like H200 liquid cooling. We have a lot of customers like that. So, although we hope better deploy in the schedule, that's good for a technology company, but this push out overall impact to us. It should be not too much".

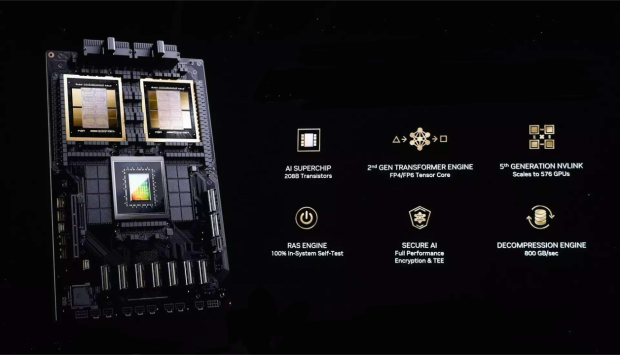

NVIDIA says it will tweak Blackwell AI GPUs, issues with the 'GPU mask' needing B200 re-spin

Yep, NVIDIA has admitted it has had issues with its new Blackwell AI GPUs that are causing low yields, forcing the company to re-spin some of the layers of its new B200 AI GPU to boost yields.

NVIDIA said in a statement: "We executed a change to the Blackwell GPU mask to improve production yield. Blackwell production ramp is scheduled to begin in the fourth quarter and continue into fiscal 2026. In the fourth quarter, we expect to ship several billion dollars in Blackwell revenue".

The design flaws plaguing NVIDIA's new Blackwell AI GPUs hit headlines a couple of weeks ago, when analyst firm KeyBanc said NVIDIA would need to "respin" the Blackwell tile, causing a 3-month delay on shipments. Now these reports ring true. KeyBanc explained at the time: "Given the Blackwell delay, we believe NVIDIA will prioritize the ramp of B200 for hyperscalers and has effectively canceled B100, which will be replaced with a lower cost/performance GPU (B200A) targeted at enterprise customers".

AI creates a playable version of the original Doom, generating each frame in real-time

Google's research scientists have published a paper on its new GameNGen technology, an AI game engine that generates each new frame in real-time based on player input. It kind of sounds like Frame Generation gone mad in that everything is generated by AI, including visual effects, enemy movement, and more.

AI generating an entire game in real-time is impressive, even more so when GameNGen uses its tech to recreate a playable version of id Software's iconic Doom. This makes sense when you realize that getting Doom to run on lo-fi devices, high-tech gadgets, and even organic material is a rite of passage.

Seeing it in action, you can see some of the issues when it comes to AI generating everything (random artifacts, weird animation), but it's important to remember that everything you see is being generated and built around you in real-time as you move, strafe, and fire shotgun blasts at demons.

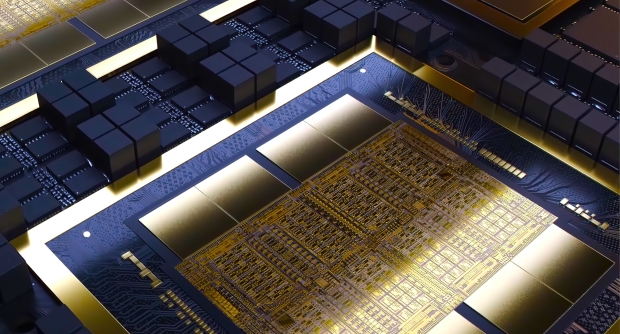

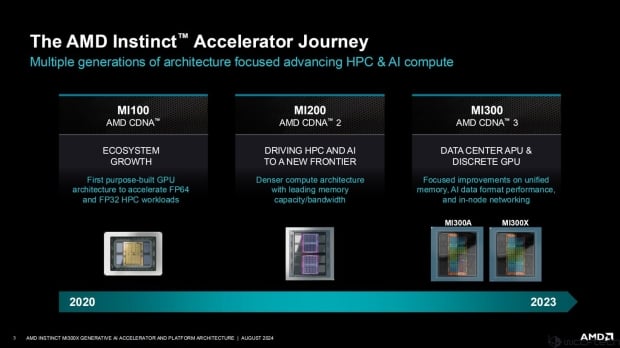

AMD details Instinct MI300X MCM GPU: 192GB of HBM3 out now, MI325X with 288GB HBM3E in October

AMD's new Instinct MI300X AI accelerator with 192GB of HBM3 got a deep dive at Hot Chips 2024 this week, and the company also teased its refreshed MI325X with 288GB of HBM3E, due later this year.

Inside, AMD's new Instinct MI300X AI Accelerator features a total of 153 billion transistors, using a mix of TSMC's new 5nm and 6nm FinFET process nodes. There are 8 chiplets (XCDs) that each feature 4 shader engines, and each shader engine contains 10 compute units.

The entire chip packs 32 shader engines, with a total of 40 compute units inside of a single XCD and 320 in total across the entire package. Each individual XCD has its own dedicated L2 cache, and on the outskirts of the package are the Infinity Fabric Link, 8 HBM3 IO sites, and a single PCIe Gen5 link with 128GB/sec of bandwidth that connects the MI300X to an AMD EPYC CPU.
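As a quick sanity check, the hierarchy described above (8 chiplets, 4 engines per chiplet, 10 compute units per engine) can be multiplied out. This is only a sketch of the arithmetic using the figures quoted in this article; the function and variable names are made up for illustration and do not come from AMD.

```python
# Figures quoted in the article; names are illustrative, not AMD's.
XCD_COUNT = 8                         # chiplets (XCDs) on the package
SHADER_ENGINES_PER_XCD = 4            # shader engines in each XCD
COMPUTE_UNITS_PER_SHADER_ENGINE = 10  # compute units in each shader engine


def mi300x_totals(xcds, engines_per_xcd, cus_per_engine):
    """Return (total shader engines, compute units per XCD, total compute units)."""
    cus_per_xcd = engines_per_xcd * cus_per_engine
    total_engines = xcds * engines_per_xcd
    total_cus = xcds * cus_per_xcd
    return total_engines, cus_per_xcd, total_cus


total_engines, cus_per_xcd, total_cus = mi300x_totals(
    XCD_COUNT, SHADER_ENGINES_PER_XCD, COMPUTE_UNITS_PER_SHADER_ENGINE
)
print(total_engines, cus_per_xcd, total_cus)  # prints: 32 40 320
```

The result lines up with the 32 shader engines and 320 compute units quoted for the full package.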

Intel shows off its next-gen Lunar Lake, Xeon 6, Gaudi 3 chips at Hot Chips 2024

Intel has announced new details on its new Xeon 6 SoC, Lunar Lake mobile processor, Gaudi 3 AI accelerator, and OCI chiplet at Hot Chips 2024 this week.

First off is the new Intel Xeon 6 SoC, which combines the compute chiplet from Intel Xeon 6 processors with an edge-optimized I/O chiplet built on Intel 4 process technology. This enables the Xeon 6 SoC to deliver performance boosts over previous-generation Xeon CPUs, with improved power efficiency and transistor density.

Intel will also share more details about its next-gen AI PC processor, Lunar Lake: Arik Gihon, the lead client CPU SoC architect, will talk about the new Lunar Lake CPU and how it's designed to "set a new bar for x86 power efficiency while delivering leading core, graphics and client AI performance".

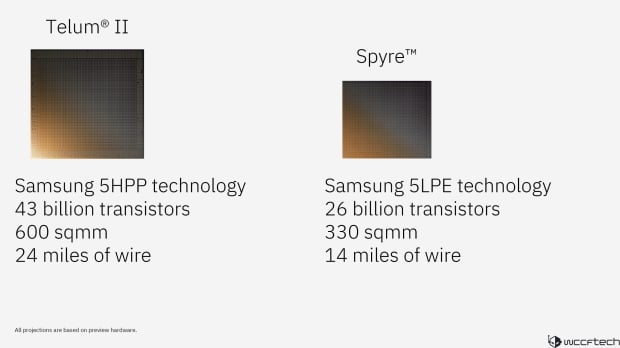

IBM unveils Telum II CPU with 8 cores at 5.5GHz, Spyre AI accelerator: 300+ TOPS, 128GB LPDDR5

IBM has just unveiled its new Telum II processor and Spyre AI accelerator, which it plans to use inside of its new IBM Z mainframe systems powering AI workloads.

The company provided details of the architecture of its new Telum II processor and Spyre AI accelerator, which are designed for AI workloads on the next-gen IBM Z mainframes. The new mainframes will accelerate traditional AI workloads, as well as LLMs using a brand new ensemble method of AI.

IBM's new Telum II processor features 8 high-performance cores running at 5.5GHz, with 36MB L2 cache per core and a 40% increase in on-chip cache capacity for a total of 360MB. The virtual level-4 cache of 2.88GB per processor drawer provides a 40% increase over the previous generation. The integrated AI accelerator allows for low-latency, high-throughput in-transaction AI inferencing, for example enhancing fraud detection during financial transactions, and provides a fourfold increase in compute capacity per chip over the previous generation.

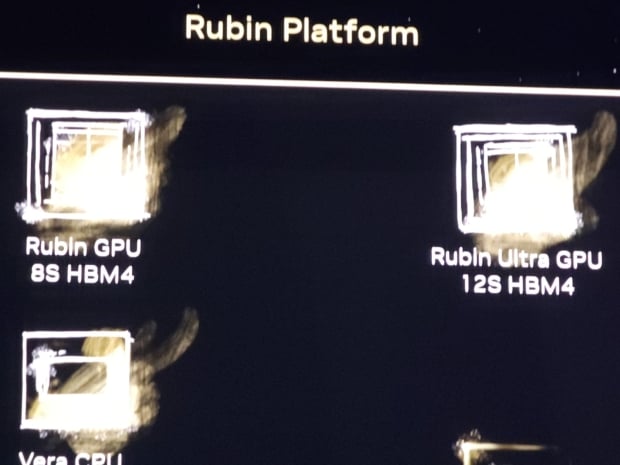

SK hynix's next-gen HBM4 tape out in October: ready for NVIDIA's future-gen Rubin R100 AI GPU

SK hynix is aiming to have its HBM4 memory tape-out in Q4 2024, ready for NVIDIA's next-gen Rubin R100 AI GPU coming in 2025.

In a new report from ZDnet, we're learning that SK hynix is nearing the final stage of commercializing its next-generation HBM4 memory, with the design drawings to be transferred to the manufacturing process, or "tape out". According to ZDnet's industry sources, SK hynix plans to complete the tape out of its HBM4 for NVIDIA in October, so we're just weeks away.

HBM4 offers a huge 40% increase in bandwidth and a rather incredible 70% reduction in power consumption compared to HBM3E, the fastest memory in the world. HBM4 density will be 1.3x higher. With all of these advancements combined, the leap in performance and efficiency is a key driver in NVIDIA's continued AI GPU dominance.

TSMC to make $31 billion in 9 months from its 3nm and 5nm process nodes alone

TSMC is expected to make over NT$1 trillion (around $31 billion USD or so) in revenue from its 3nm and 5nm process nodes, in a span of just 9 months.

DigiTimes reports that TSMC will generate around $31 billion from just two of its high-end semiconductor nodes, thanks to unstoppable demand from customers like Apple, AMD, NVIDIA, Intel, Qualcomm, and MediaTek. TSMC saw a huge revenue increase in Q2 2024 to NT$336.7 billion, or around 40% of their total revenue in Q1 2023.

TSMC estimates it will generate NT$754 billion (around $23 billion USD or so) from its 3nm and 5nm process nodes in Q3 2024, with major customers in Apple and NVIDIA.
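For readers who want to check the currency conversions, here is a rough sketch that backs out the exchange rate implied by the first pair of figures (NT$1 trillion and $31 billion) and applies it to the Q3 estimate. The rate is derived purely from the rounded numbers in this story, not from any official source, and the helper names are hypothetical.

```python
# Rounded figures from the article, in billions. The implied rate is an
# assumption derived from those figures, not an official exchange rate.
NINE_MONTH_NTD_BN = 1000.0  # NT$1 trillion
NINE_MONTH_USD_BN = 31.0    # "around $31 billion USD or so"
Q3_NTD_BN = 754.0           # NT$754 billion


def implied_rate(ntd_bn, usd_bn):
    """NT$ per US$ implied by a pair of quoted figures."""
    return ntd_bn / usd_bn


def ntd_to_usd(ntd_bn, rate):
    """Convert billions of NT$ to billions of US$ at the given rate."""
    return ntd_bn / rate


rate = implied_rate(NINE_MONTH_NTD_BN, NINE_MONTH_USD_BN)
q3_usd_bn = ntd_to_usd(Q3_NTD_BN, rate)
print(f"implied rate: {rate:.2f} NT$ per US$")
print(f"Q3 estimate: ${q3_usd_bn:.1f} billion")
```

At the implied rate of roughly 32 NT$ to the dollar, NT$754 billion works out to a little over $23 billion, consistent with the figure quoted above.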

This data center AI chip roadmap shows NVIDIA will dominate far into 2027 and beyond

In a recently shared data center AI chip roadmap posted on X, we get a good look at what companies have on the market already, and what's in the AI chip pipeline through to 2027.

The list includes chip makers NVIDIA, AMD, Intel, Google, Amazon, Microsoft, Meta, ByteDance, and Huawei. You can see the list of NVIDIA AI GPUs includes the Ampere A100 through to the Hopper H100, GH200, H200 AI GPUs, and into the Blackwell B200A, B200 Ultra, GB200 Ultra and GB200A. But after that -- which we all know is coming -- come Rubin and Rubin Ultra, both rocking next-gen HBM4 memory.

We also have AMD's growing line of Instinct MI series AI accelerators, with the MI250X through to the new MI350 and the upcoming MI400 listed in there for 2026 and beyond.

Lightweight AI - NVIDIA releases Small Language Model with industry leading accuracy

Mistral-NeMo-Minitron 8B is a "miniaturized version" of the new highly accurate Mistral NeMo 12B AI model. It is tailor-made for GPU-accelerated data centers, the cloud, and high-end workstations with NVIDIA RTX hardware. Accuracy is often sacrificed for performance in scalable AI models; Mistral AI and NVIDIA's new Mistral-NeMo-Minitron 8B delivers the best of both worlds.

It is small enough to run in real-time on a workstation or desktop rig with a high-end GeForce RTX 40 Series graphics card, with NVIDIA noting that the 8B (8 billion parameter) variant excels in benchmarks for AI chatbots, virtual assistants, content generation, and educational tools.

Available and packaged as an NVIDIA NIM microservice (downloadable via Hugging Face), Mistral-NeMo-Minitron 8B is currently outperforming Llama 3.1 8B and Gemma 7B in the all-important accuracy category in at least nine popular benchmarks for AI language models.