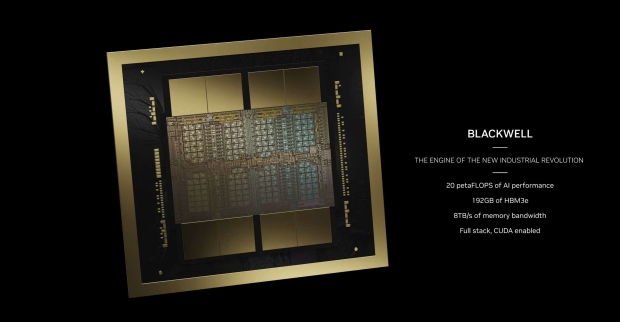

NVIDIA has just revealed its new Blackwell B200 GPU, alongside new DGX B200 systems built as AI supercomputing platforms for AI model training, fine-tuning, and inference.

The new NVIDIA DGX B200 is the sixth generation of the traditional air-cooled, rack-mounted DGX design used worldwide. Each DGX B200 system is powered by 8 x NVIDIA Blackwell GPUs alongside 2 x Intel 5th Gen Xeon CPUs.

Each DGX B200 system features up to 144 petaFLOPS of AI performance and an insane 1.4TB of HBM3E GPU memory with a bonkers 64TB/sec of memory bandwidth, delivering 15x faster real-time inference for trillion-parameter models compared with the previous-gen Hopper GPU architecture.
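As a back-of-the-envelope check, the system-level numbers above can be split across the eight GPUs. This is a rough sketch that assumes an even per-GPU split; the `per_gpu` helper and the spec names are our own illustration rather than an NVIDIA tool, and because the 1.4TB memory figure is rounded, the per-GPU memory result is only approximate.

```python
# Rough sketch: split the DGX B200 system-level figures quoted above evenly
# across the system's 8 GPUs. The even split is our assumption, not an NVIDIA spec.
NUM_GPUS = 8

system_specs = {
    "ai_petaflops": 144.0,          # quoted "AI performance" (precision not stated)
    "hbm3e_memory_tb": 1.4,         # total GPU memory (a rounded figure)
    "memory_bandwidth_tb_s": 64.0,  # total memory bandwidth in TB/sec
}


def per_gpu(specs: dict[str, float], gpus: int) -> dict[str, float]:
    """Return each system-level figure divided evenly across `gpus` GPUs."""
    return {name: value / gpus for name, value in specs.items()}


if __name__ == "__main__":
    for name, value in per_gpu(system_specs, NUM_GPUS).items():
        print(f"{name}: {value:g}")
```

Running it works out to 18 petaFLOPS, roughly 0.175TB of HBM3E and 8TB/sec of memory bandwidth per GPU.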

Jensen Huang, founder and CEO of NVIDIA, explained: "NVIDIA DGX AI supercomputers are the factories of the AI industrial revolution. The new DGX SuperPOD combines the latest advancements in NVIDIA accelerated computing, networking and software to enable every company, industry and country to refine and generate their own AI."

The DGX B200 also features advanced networking, with 8 x NVIDIA ConnectX-7 NICs and 2 x BlueField-3 DPUs delivering up to 400Gb/sec (gigabits per second) of networking bandwidth per connection, providing the ultimate in fast AI performance with NVIDIA Quantum-2 InfiniBand and NVIDIA Spectrum-X Ethernet networking platforms.
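Networking rates such as the 400 per-connection figure are quoted in gigabits per second (NVIDIA's spec is 400Gb/s), so dividing by 8 gives gigabytes per second. Here's a minimal sketch, assuming all eight ConnectX-7 NICs each run one 400Gb/s link and leaving the BlueField-3 DPUs out of the total. `aggregate_bandwidth` is a hypothetical helper, and the figures are raw line rates that ignore protocol overhead.

```python
# Minimal sketch: aggregate the per-connection networking rate quoted above.
# Assumptions (ours): each of the eight ConnectX-7 NICs runs one 400Gb/s link,
# and the two BlueField-3 DPUs are excluded. Raw line rate, no protocol overhead.
NUM_CONNECTX7_NICS = 8
LINK_GBITS_PER_S = 400  # gigabits (not gigabytes) per second per connection
BITS_PER_BYTE = 8


def aggregate_bandwidth(nics: int, gbits_per_link: float) -> tuple[float, float]:
    """Return (total Gb/s, total GB/s) across `nics` links of `gbits_per_link` each."""
    total_gbits = nics * gbits_per_link
    return total_gbits, total_gbits / BITS_PER_BYTE


if __name__ == "__main__":
    gbits, gbytes = aggregate_bandwidth(NUM_CONNECTX7_NICS, LINK_GBITS_PER_S)
    print(f"{gbits:g} Gb/s total = {gbytes:g} GB/s")
    print(f"per link: {LINK_GBITS_PER_S / BITS_PER_BYTE:g} GB/s")
```

Under those assumptions, the eight links add up to 3,200Gb/sec, or roughly 400GB/sec in aggregate, while a single connection works out to about 50GB/sec.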

NVIDIA says both the new DGX SuperPOD with DGX GB200 systems and the DGX B200 systems are expected to be available "later this year" from its global partners.